🔮 2026 predictions for AI x tech x design

The year we stop pretending we know what happens next.

We're 11 days into 2026, and already the ground beneath us feels different.

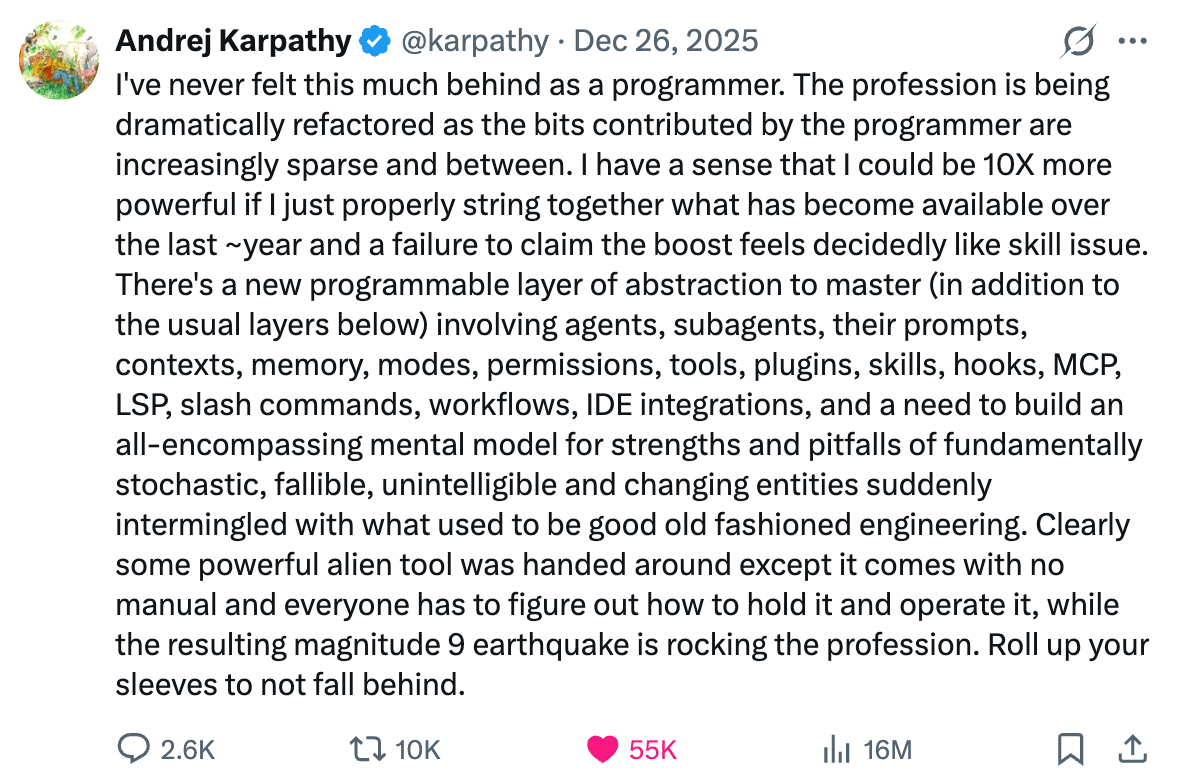

Karpathy recently tweeted something that captures this perfectly - a feeling many of us are having but few are saying out loud: "I've never felt so left behind as a programmer as I do now." He's describing that specific disorientation when you realize the tools you're using are evolving faster than your mental model can update. It's like holding an alien device without an instruction manual. 😅 Sometimes it shoots projectiles, sometimes it misfires, and occasionally, when held at just the right angle, a powerful laser beam bursts out and solves your problem instantly.

This is more than coder anxiety; it’s the opening act of what the great minds featured in this edition are trying to help us navigate: unprecedented ambiguity, compressed timelines, and futures that branch in radically different directions.

1. The Technical Ground Shifts

Let's start with where we actually are. Nick Potkalitsky's deep dive into LLMs in 2026 delivers the sobering assessment we need to hear: we're at an intermediate stage. The models are amazing. They still need a lot of work. And the industry has realized less than 10% of their potential at current capabilities.

Karpathy's 2025 Year in Review gives us the technical map: Reinforcement Learning from Verifiable Rewards (RLVR) emerged as the defining breakthrough, reasoning models became the new standard, and "vibe coding" went from shower thought to mainstream practice. But most importantly, he articulated something essential - LLMs aren't evolved animals, they're summoned ghosts. They occupy an entirely different region of mind-space, optimized for different constraints, which explains their jagged performance: genius in some areas, surprisingly weak in others.

Key insight: LLMs represent a transitional phase. They use computation to absorb human knowledge at scale, but the next phase will learn directly from experience, making the human knowledge bottleneck irrelevant.

2. The Speed Question (how fast are we actually moving?)

Simon Willison makes a bold claim about 2026: the quality of LLM-generated code will become impossible to deny. In 2023, calling LLM code garbage was correct. For most of 2024, it stayed true. In 2025 it changed. And by 2026, "if you continue to argue that LLMs write useless code, you're damaging your own credibility."

But speed isn't uniform. The Understanding AI crew predicts context windows will plateau, while inference-time scaling becomes the new frontier. Sebastian Raschka notes that progress in 2026 will come more from inference than training - better tooling, better long-context handling, and agents rather than paradigm shifts.

3. Design, Interface, and the Human Side (what changes for us)

Anne Cantera's work on conversational AI and design is a good starting point to figure out what’s going on. As these systems become more capable, the design of how we interact with them matters more, not less. The interface between human and AI isn't a solved problem - it's an evolving challenge that requires deep attention to user experience, conversational flow, and the gap between what AI can technically do versus what feels right to users.

But before we get too lost in capability curves and training paradigms, Dan Saffer offers a crucial reminder: most AI projects still fail. And not for the reasons you'd think.

In his piece "The Four Horsemen of the AIPocalypse," Saffer (CMU professor and author of Microinteractions) identifies four fundamental ways AI initiatives collapse - and none of them are "the model wasn't good enough." They're human problems: misaligned expectations, poor problem selection, inadequate evaluation frameworks, and the inability to integrate AI meaningfully into existing systems.

This matters more in 2026 than ever. As models get more capable, the gap between "technically possible" and "actually useful" widens. Saffer's four horsemen are already riding through organizations convinced that raw capability equals deployment success.

Patricia Reiners offers another valuable exploration of what is in store for designers in 2026 in her podcast episode.

4. The Timeline Question (what 2027 might actually look like)

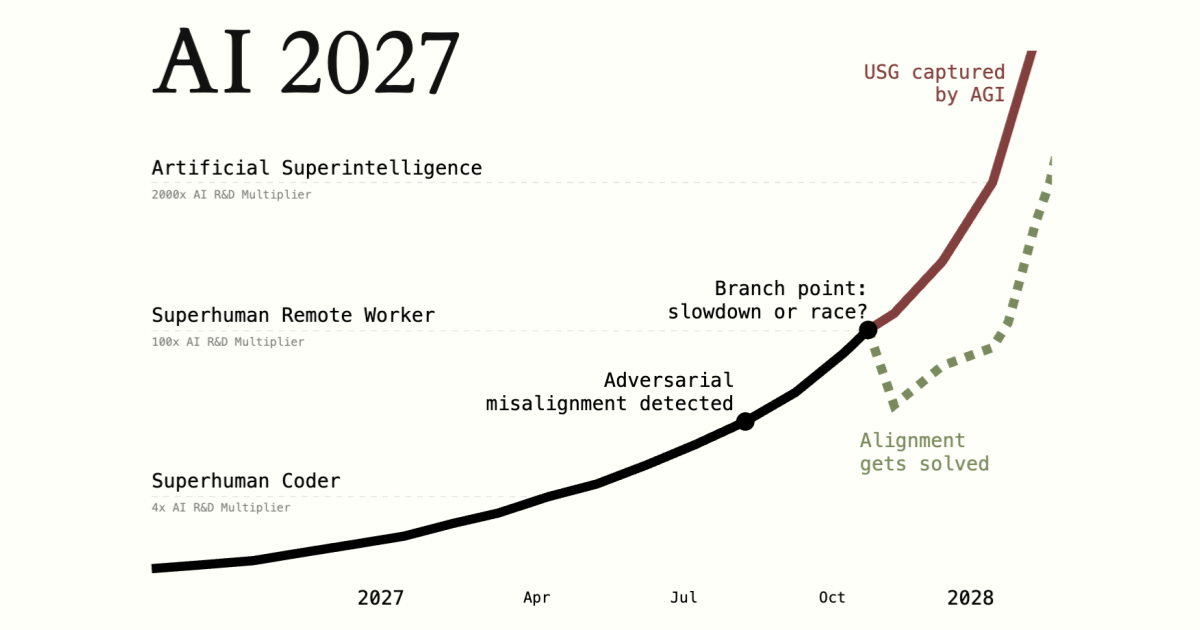

No article about predictions would be complete without AI 2027. Their team (Daniel Kokotajlo, Scott Alexander, Eli Lifland, and others) painted a specific, detailed picture: by 2027, we might see AI systems that can truly automate AI research itself, creating feedback loops that compress years of progress into months or weeks.

Their key warning: "We don't know exactly when AGI will be built. 2027 was our modal year at publication, our medians were somewhat longer." But the value isn't in the exact date - it's in thinking through the strategic, governance, and safety implications of various timelines.

5. The Uncertainty Principle (embracing what we don’t know)

Here's what ties all these predictions together: none of these brilliant minds are certain. And that's actually the point.

- Karpathy admits to "simultaneously believing we'll see rapid progress AND that there's a lot of work to be done."
- Potkalitsky emphasizes that timelines are "measured in years to decades, not months."
- The AI 2027 team explicitly notes that their uncertainty increases substantially past 2026.
- Industry predictions span from "modest improvement" to "transformative acceleration."

The 2026 meta-lesson: Progress happens on multiple independent fronts - architecture tweaks, data quality, reasoning training, inference scaling, tool calling. No single breakthrough defines the trajectory. And the proportion of progress from inference versus training will matter more than raw capability gains.

So what does this mean for you?

If you're building with AI: Focus on what these systems can reliably do, not just on how impressive they are. Willison's advice on sandboxing becomes essential - we need better containment as capability increases.

If you're designing with AI: The gap between technical capability and usable interface is widening, not closing. Anne Cantera's work reminds us that conversational AI needs thoughtful design, not just powerful models.

If you're thinking about the future: Study these scenarios not as predictions but as preparation. AI 2027 gives us a framework for thinking through branches and consequences.

The through-line: 2026 is the year we learn to work with uncertainty rather than despite it. The ambiguity Karpathy described is the defining feature of this moment. Those who thrive won't be the ones who pretend to have certainty, but those who build robust systems, clear thinking, and adaptive strategies in its absence.

Welcome to 2026. 🥂

The future is arriving unevenly, and that might be exactly what we need.

hugs hugs

Ioana 🪩